Chaos and discipline: the two words sound like an oxymoron, and you might be wondering how chaos can produce disciplined microservices.

But the simple truth is that discipline means the absence of chaos, so until you have experienced chaos you cannot be disciplined.

Think about the law of entropy: chaos is the high-entropy state and discipline is the low-entropy state. Left alone, disciplined services degrade into chaotic ones as the system moves toward equilibrium, because the natural direction of flow is from low entropy (discipline) to high entropy (chaos). So chaos is inevitable.

Now, if we want our services to stay in a low-entropy (disciplined) state throughout, we need to adopt a few special techniques. According to the laws of physics, the flow from low to high entropy is irreversible on its own, so keeping a system ordered means working against entropy. Let's call it reverse entropy (watch Christopher Nolan's masterpiece Tenet!). A refrigerator is a reverse-entropy device: it spends energy to keep things cool.

The crux is that to maintain discipline in our services we need a resilience strategy. But how do we determine which resilience strategy to adopt? For that, we have to experience chaos in production and act accordingly.

This is the essence of chaos engineering: inject mild faults into the system to experience chaos, then build preventive measures and self-healing against it.

Today I am talking about implementing chaos in production!

After hearing this you might wonder what I am saying. Am I insane? I am encouraging you to introduce chaos in production, the most emotional and sensitive area for a developer. We all pray that whatever errors exist show up before production. If something goes wrong in production, your organization's reputation is at stake, and it can lose users, revenue, and more. And here I am, encouraging you to inject faults and chaos there.

But the irony is that we have the wrong mindset. Our mindset should be “Failure is inevitable and we must prepare for it”. In this tutorial, I am advocating for this culture.

A simple Microservice definition::

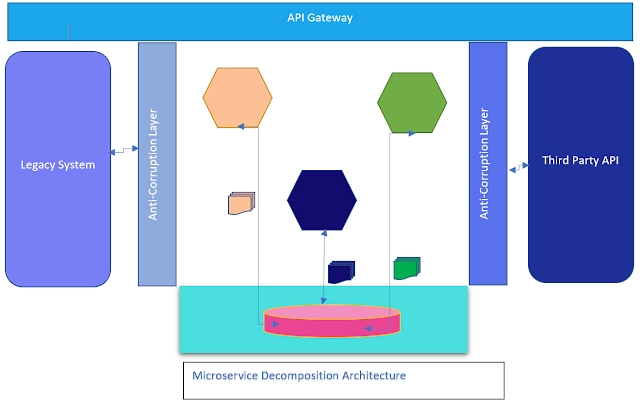

A microservice architecture is distributed in nature and consists of a suite of small services that can be scaled and deployed independently.

If we unpack the above statement, we find three important things.

Because microservices are distributed, they communicate over the network, and the network is unreliable. So how can your microservices be reliable?

Microservices call each other over the network, so they depend on each other and can fail when a service they depend on fails.

Microservices are scaled and deployed on infrastructure, so if the infrastructure fails, your microservices fail.

These points justify the “Failure is inevitable and we must prepare for it” statement.

But the question is: how do we prepare for it?

The answer is chaos engineering.

What is Chaos Engineering?

Chaos engineering is a technique for measuring the resilience of your architecture. We inject faults (increased load, injected delay) and then observe how the services react and how resilient they are. This is called FIT (Failure Injection Testing). If a service is not resilient, we identify the weakness and fix it so the service can handle real failures in production.
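To make the idea of injecting a delay or a failure concrete, here is a minimal sketch in plain Python. It is not the API of Netflix's FIT or of any chaos tool; the names `inject_fault`, `InjectedFault`, and `get_price` are hypothetical and exist only for illustration.

```python
import random
import time
from functools import wraps


class InjectedFault(Exception):
    """Raised instead of a real dependency error during an experiment."""


def inject_fault(failure_rate=0.0, delay_seconds=0.0,
                 rng=random.random, sleep=time.sleep):
    """Wrap a call so it is delayed and, with probability failure_rate, fails.

    rng and sleep are parameters only so that tests can replace them.
    """
    def decorator(func):
        @wraps(func)
        def wrapper(*args, **kwargs):
            if delay_seconds > 0:
                sleep(delay_seconds)              # injected latency
            if rng() < failure_rate:              # injected failure
                raise InjectedFault(f"injected failure in {func.__name__}")
            return func(*args, **kwargs)
        return wrapper
    return decorator


@inject_fault(failure_rate=0.2, delay_seconds=0.05)
def get_price(item_id):
    # Hypothetical stand-in for a call to a downstream pricing service.
    return {"item_id": item_id, "price": 9.99}
```

With these settings, every call to `get_price` is slowed by 50 ms and roughly one call in five raises `InjectedFault`, which lets you watch how the calling service copes.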

Image courtesy : Netflix

Dependency, Resilience, and Chaos Engineering::

Chaos in Dependency::

Microservices call each other to fulfill a business capability, so the dependencies between them are a major factor. We can predict many types of failure: a dependent service is unavailable; a service is not in a state to receive requests; one service fails and, as a cascading effect, the whole chain of microservices fails and crashes; the distributed cache is unavailable; cache memory crashes; or a component acts as a single point of failure.

We will talk about the resilience strategy for all the above cases.

Resilience Technique::

Hystrix is a Netflix OSS tool for implementing the circuit breaker pattern in services. If a dependent service fails for several requests in a row, it is better to stop calling it and take a default route, so that the whole microservice call chain does not break and the user experience is not interrupted.

Another resilience technique is to identify the critical services that are the heart of the business and make sure that, if other services fail, these services keep running and give users a minimal experience rather than halting the whole user experience.
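The circuit breaker idea can be sketched in a few lines of plain Python. This is not Hystrix's API, only an illustration of the pattern; the class name, the threshold of consecutive failures, and the reset timeout are all assumptions made for the example.

```python
import time


class CircuitBreaker:
    """Stop calling a failing dependency and serve a fallback instead."""

    def __init__(self, failure_threshold=3, reset_timeout=30.0,
                 clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock          # injectable so tests can control time
        self.failures = 0
        self.opened_at = None       # None means the circuit is closed

    def call(self, func, fallback):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                return fallback()   # open: do not touch the dependency
            # Timeout elapsed: half-open, let one trial call through.
        try:
            result = func()
        except Exception:
            self.failures += 1
            half_open = self.opened_at is not None
            if half_open or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()   # open (or re-open)
            return fallback()
        # Success closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

A caller wraps each dependency call, for example `breaker.call(fetch_recommendations, lambda: [])`, where `fetch_recommendations` is whatever function talks to the dependent service and the empty list is the default route.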

In a real-time architecture we don’t call the persistence layer on every request, because that adds latency. Also, not all business features are stateless: we need data shared across multiple microservices. So we use caching, with some orchestration in our code: when a request comes in, we first check the cache; if the data is not there, we call the persistence layer and add the result to the cache for further requests.

Now, if the cache fails or acts as a single point of failure, our services will fail. To avoid this, we use distributed caching with replication, and the data must be replicated to different availability zones so that if one zone fails, the data can be retrieved from another zone.

We need to adopt a multi-region strategy for the persistence layer as well as for the microservices. If your business is spread across geographies, then architecturally we must have data centers in different regions, say US North, US East, Asia Pacific, Europe, etc.

Now the interesting thing is that if one data center serves one region, say the Asia Pacific data center serves Asia, and that data center goes down, all your Asia Pacific users are affected. So we must have a multi-region failover strategy: if one region goes down, its users' requests can be served by another region, which gives us a resilient system.
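The check-cache-then-persistence flow described here is often called cache-aside. A minimal sketch follows, assuming a dict-like cache client and a `load_from_store` function that stands in for the persistence layer (both hypothetical). As an extra assumption, the sketch treats a cache error as a miss so that a broken cache slows requests down instead of failing them.

```python
class CacheAsideRepository:
    """Read through a cache, falling back to the persistence layer."""

    def __init__(self, cache, load_from_store):
        self.cache = cache                      # any dict-like cache client
        self.load_from_store = load_from_store  # function: key -> value

    def get(self, key):
        try:
            if key in self.cache:
                return self.cache[key]          # cache hit
        except Exception:
            # Cache unavailable: serve straight from the store.
            return self.load_from_store(key)
        value = self.load_from_store(key)       # cache miss
        try:
            self.cache[key] = value             # populate for later requests
        except Exception:
            pass                                # a failed write must not fail the request
        return value
```

In a real system the dict would be replaced by a client for a distributed cache, and entries would normally carry an expiry, which this sketch leaves out.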
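The failover decision itself is simple to express. Below is a rough sketch, assuming each region has an ordered list of backup regions; the region names and the `FAILOVER_ORDER` table are invented for the example. In practice this logic usually lives in DNS or a global load balancer rather than in application code.

```python
# Hypothetical failover table: home region -> backups in order of preference.
FAILOVER_ORDER = {
    "asia-pacific": ["europe", "us-east"],
    "europe": ["us-east", "asia-pacific"],
    "us-east": ["europe", "asia-pacific"],
}


def route_request(user_region, healthy_regions, failover_order=FAILOVER_ORDER):
    """Return the region that should serve a user, or None if none is healthy."""
    if user_region in healthy_regions:
        return user_region                      # normal case: home region
    for candidate in failover_order.get(user_region, []):
        if candidate in healthy_regions:
            return candidate                    # first healthy backup wins
    return None                                 # total outage
```

If the Asia Pacific region is down and the others are healthy, `route_request("asia-pacific", {"europe", "us-east"})` returns `"europe"`.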

Chaos Testing::

To check how services react when a dependency is unavailable or when latency is introduced into a call, we can use the Chaos Monkey framework and Chaos Toolkit.

To check whether your critical services work on their own, you can blacklist the other services and whitelist only the critical ones, then see how the system behaves when only the critical services are up.

We can use Chaos Monkey to kill random nodes and Chaos Gorilla to take out an entire availability zone, then see how services react, assuming multiple instances of each service are deployed across multiple nodes.

We have Chaos Kong, which can take down an entire region to check the multi-region failover strategy.

Scaling, Resilience, and Chaos Engineering::

Chaos in Scaling::

In microservices we generally scale along the X, Y, and Z axes. While scaling, we can face many types of issues, such as zone unavailability, server affinity, sticky sessions, cache unavailability, etc.

Resilience Technique::

Generally, when we build a microservices or distributed architecture, we say we should build stateless microservices, but that does not always happen. Business requirements such as cart functionality force us to keep state, so we adopt techniques like server affinity or sticky sessions: once a request from a user reaches a node, the load balancer makes sure that user's further requests are processed by that node only. This technique has a downside. Although the system is distributed and has multiple instances, if that particular node goes down, all the users served by it will experience errors, which is not what we want.

So we need to make sure our services do not have any server affinity. We can use a distributed cache to store stateful data or sessions. We can also carry the state in the request payload, populated by the different microservices along the way, so the data is available through the whole request cycle.

For the cache, as I mentioned earlier, data needs to be replicated across multiple regions. Otherwise, if one cache region goes down, all the users whose data is stored in that region are impacted. With replication, the data remains available from other regions.

Another important thing: when we adopt horizontal scaling, we must make sure it can auto-scale based on load. We need to treat each node as cattle, not as a pet, so if one node malfunctions we can kill that node (I am against animal cruelty) and spawn another one automatically. If your services have server affinity or your load balancer uses a sticky-session strategy, auto-scaling does not work properly, because sessions pinned to a node are lost when that node is removed, so fix that first. Your organization's infrastructure must be capable of self-healing and of spawning new nodes based on load. So it is advisable to use containerization and a container orchestrator such as Kubernetes or Docker Swarm to handle this.
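Here is a small sketch of what removing server affinity looks like for the cart example. The `Node` class and the `shared_store` dict are hypothetical; the dict stands in for a distributed cache that every node can reach.

```python
class Node:
    """A service instance that keeps no session state of its own."""

    def __init__(self, name, session_store):
        self.name = name
        self.session_store = session_store      # shared, not node-local

    def add_to_cart(self, session_id, item):
        cart = self.session_store.get(session_id, [])
        self.session_store[session_id] = cart + [item]
        return self.session_store[session_id]


shared_store = {}                               # stand-in for a distributed cache
node_a = Node("node-a", shared_store)
node_b = Node("node-b", shared_store)

node_a.add_to_cart("session-1", "book")
# The next request lands on a different node, for example because node-a died.
cart = node_b.add_to_cart("session-1", "pen")
print(cart)                                     # ['book', 'pen']
```

Because the cart lives in the shared store, the load balancer is free to send each request to any healthy node.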

Chaos Testing::

To check whether your service has server affinity, you can randomly kill nodes with Chaos Monkey or Chaos Toolkit and observe the outcome.

Infrastructure, Resilience, and Chaos Engineering::

Chaos in Infrastructure::

While adopting microservices you need strong infrastructure support. Most organizations either use a cloud provider or run their own internal cloud or data centers. Whatever the case, your microservices architecture depends on infrastructure: if the infrastructure fails, your microservices fail too. So we must think about infrastructure failure and design our architecture so that we can mitigate it.

Resilience Technique::

For infrastructure, we need a multi-region strategy and a failover mechanism, so that if one region's infrastructure goes down, another region can serve that region's users.

Also, we need to choose data centers smartly. The physical distance between two data centers should not be too large, because more network hops increase response time, but they must be far enough apart that a natural calamity or terrorist attack does not impact both data centers.

Chaos Testing::

Using Chaos Kong we can take down an entire region to see how failover works.

- You must have a well-designed DevOps pipeline in which you can run chaos experiments, for example by routing roughly 1% of the load through the chaos engineering path to see how your system reacts.

- You need well-designed container orchestration, where you can manage containers, autoscaling, failover, networking rules, etc.

- Chaos engineering is meant for complex business systems. If your system is simple enough, don’t adopt chaos engineering: it will increase your overhead and cost.

- After adopting chaos engineering you must hold GameDays, days on which you run chaos tests in production, and they have to be conducted at regular intervals.

- You need automation platforms that implement chaos quality gates covering all types of failure testing. If your team has to do this manually, in the long run the team will lose interest and things will get out of control.

- If you identify a new failure in production during a chaos experiment, terminate the test and reroute traffic to the original route so that users do not experience any errors.

- When chaos testing uncovers a new bug, the team must do a root cause analysis (RCA), fix the bug, and then find a way to automate the test and add it to the chaos quality gates.

- The organization must have a chaos checklist; every service needs to pass that checklist before it is promoted to production.

- Chaos engineering in production is risky if your team is not skilled enough. It is better to start in lower environments and move to production once the team has acquired the skill.

- While testing in production it is important to minimize the blast radius. Causing customers unnecessary pain is not a good way to experiment with chaos, so the chaos engineering team must keep the experiment within a minimal blast radius and fall back to the original route if something goes wrong.
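Several of the points above (sending about 1% of the load down the chaos path, aborting when something unexpected shows up, and falling back to the original route) can be combined in one small sketch. The `ChaosExperiment` class, its parameters, and the abort threshold are assumptions made for illustration, not the behavior of any particular chaos tool.

```python
import random


class ChaosExperiment:
    """Send a small fraction of traffic down a chaos path, with an abort switch."""

    def __init__(self, traffic_fraction=0.01, max_failures=5, rng=random.random):
        self.traffic_fraction = traffic_fraction  # blast radius, e.g. 1% of requests
        self.max_failures = max_failures          # failures tolerated before aborting
        self.rng = rng                            # injectable for tests
        self.failures = 0
        self.aborted = False

    def handle(self, request, normal_path, chaos_path):
        # Most requests, and all requests after an abort, take the normal path.
        if self.aborted or self.rng() >= self.traffic_fraction:
            return normal_path(request)
        try:
            return chaos_path(request)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.aborted = True               # terminate the experiment
            return normal_path(request)           # the user still gets an answer
```

Each request goes through `experiment.handle(request, normal_path, chaos_path)`. Once `aborted` is set, every request takes the original route again until someone has investigated the failures.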